If you’ve been following the rise of AI copilots and browser agents, you might have noticed a big missing piece: how do these agents actually do things inside a web app without resorting to brittle click simulation or screen scraping?

Two new ideas aim to fix that: MCP (Model Context Protocol) and its browser-friendly sibling WebMCP.

In this post I’ll explain what they are, why static metadata isn’t enough, how the protocol works, and show real code so you can see what an agent actually does.

The Problem Today

Web apps are interactive and stateful.

AI agents want to help us: “create an invoice,” “filter results,” “upload a file.”

But right now, they mostly simulate user clicks or parse the DOM. That’s brittle, breaks when the UI changes, and can’t safely reuse your logged-in state.

We have metadata standards (SEO tags, JSON-LD, schema.org) and accessibility APIs (ARIA). They’re great for describing pages, but they’re static and read-only.

We need a way to expose real functions to agents.

Enter MCP (Model Context Protocol)

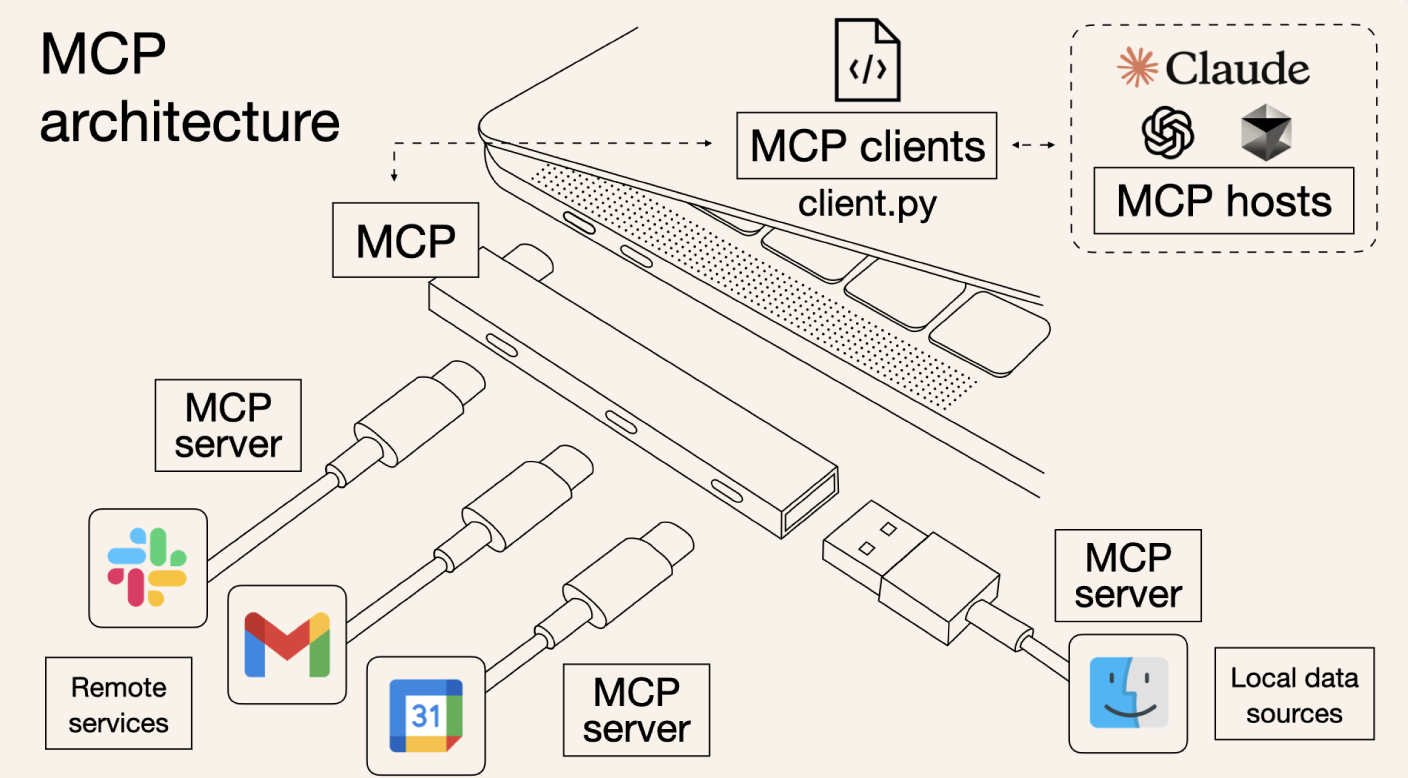

MCP is a formal, open protocol that lets an application expose tools (functions) to an AI agent in a structured way.

Think of MCP as USB for AI agents:

- It defines standard JSON-RPC 2.0 messages (requests, responses, notifications).

- It defines methods such as tools/list and tools/call.

- It uses JSON Schema to describe each tool’s inputs and outputs.

The agent doesn’t guess — it asks for a list of tools, sees their schemas, and calls them like an API.
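To make the schema idea concrete, here is a minimal sketch of what a tool description could look like and how an agent might sanity-check arguments before calling it. The `createInvoiceTool` descriptor (with a simplified `total` field) and the `checkArguments` helper are illustrative assumptions, not part of MCP. A real agent would use a full JSON Schema validator rather than this hand-rolled check of required fields and primitive types.

```javascript
// Hypothetical tool descriptor: a name, a description, and a JSON Schema for inputs.
const createInvoiceTool = {
  name: 'createInvoice',
  description: 'Create a new invoice',
  inputSchema: {
    type: 'object',
    properties: {
      customerEmail: { type: 'string' },
      total: { type: 'number' }
    },
    required: ['customerEmail', 'total']
  }
};

// Illustrative helper (NOT a full JSON Schema validator): reports missing
// required fields and top-level string/number/boolean fields of the wrong type.
function checkArguments(tool, args) {
  const { properties = {}, required = [] } = tool.inputSchema;
  const primitives = ['string', 'number', 'boolean'];
  const problems = [];
  for (const key of required) {
    if (!(key in args)) problems.push(`missing: ${key}`);
  }
  for (const [key, value] of Object.entries(args)) {
    const expected = properties[key] && properties[key].type;
    if (primitives.includes(expected) && typeof value !== expected) {
      problems.push(`wrong type: ${key} should be ${expected}`);
    }
  }
  return problems;
}

console.log(checkArguments(createInvoiceTool, { customerEmail: 'a@example.com' }));
console.log(checkArguments(createInvoiceTool, { customerEmail: 'a@example.com', total: 42 }));
```

The first call reports the missing `total` field and the second returns an empty list, so the agent knows the arguments are at least plausibly well-formed before it sends anything.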

A Tiny MCP Conversation

```jsonc
// Agent → App
{
  "jsonrpc": "2.0",
  "id": "1",
  "method": "tools/list",
  "params": {}
}

// App → Agent
{
  "jsonrpc": "2.0",
  "id": "1",
  "result": {
    "tools": [
      {
        "name": "getPageInfo",
        "description": "Get the current page's title and URL",
        "inputSchema": { "type": "object", "properties": {} }
      }
    ]
  }
}

// Agent → App
{
  "jsonrpc": "2.0",
  "id": "2",
  "method": "tools/call",
  "params": {
    "name": "getPageInfo",
    "arguments": {}
  }
}

// App → Agent
{
  "jsonrpc": "2.0",
  "id": "2",
  "result": {
    "content": [
      { "type": "text", "text": "{\"title\":\"Dashboard\",\"url\":\"https://example.com\"}" }
    ]
  }
}
```

This format is defined by the MCP spec, which builds on JSON-RPC 2.0: every MCP-capable agent and app speaks this same language.

Spec link: https://modelcontextprotocol.io/spec
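As a rough sketch of the app side of such an exchange, the handler below answers list and call requests from an in-memory registry. It assumes the JSON-RPC 2.0 envelope and the `tools/list` and `tools/call` method names from the MCP specification. The `tools` map, the `handleMessage` function, and the hard-coded page values are hypothetical stand-ins; a real app would rely on an MCP SDK instead of writing this by hand.

```javascript
// Hypothetical in-memory registry: tool name -> { description, inputSchema, handler }.
const tools = new Map();
tools.set('getPageInfo', {
  description: "Get the current page's title and URL",
  inputSchema: { type: 'object', properties: {} },
  // Hard-coded values stand in for document.title and location.href.
  handler: async () => ({ title: 'Dashboard', url: 'https://example.com' })
});

// Answers one JSON-RPC 2.0 request with a response that echoes its id.
async function handleMessage(request) {
  const reply = (body) => ({ jsonrpc: '2.0', id: request.id, ...body });

  if (request.method === 'tools/list') {
    const list = [...tools].map(([name, { description, inputSchema }]) =>
      ({ name, description, inputSchema }));
    return reply({ result: { tools: list } });
  }

  if (request.method === 'tools/call') {
    const tool = tools.get(request.params.name);
    if (!tool) {
      // -32602 is the JSON-RPC "invalid params" code.
      return reply({ error: { code: -32602, message: `Unknown tool: ${request.params.name}` } });
    }
    const value = await tool.handler(request.params.arguments || {});
    return reply({ result: { content: [{ type: 'text', text: JSON.stringify(value) }] } });
  }

  // -32601 is the JSON-RPC "method not found" code.
  return reply({ error: { code: -32601, message: `Method not found: ${request.method}` } });
}

handleMessage({ jsonrpc: '2.0', id: 1, method: 'tools/list', params: {} })
  .then((response) => console.log(JSON.stringify(response)));
```

The point of the sketch is the shape of the loop: every request carries an id, every response echoes it, and the tool result comes back as typed content parts rather than a bare value.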

Where WebMCP Fits

MCP itself is transport-agnostic. The spec defines standard transports such as stdio and HTTP, and it allows custom ones.

WebMCP (sometimes called MCP-B) is a browser transport binding: it lets your web page run an MCP server in the page itself using postMessage. This way:

- Your page exposes tools directly.

- Agents running as browser extensions or overlays can discover and call them.

- Calls happen with the user’s existing auth/session, securely in their own browser.
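Running in the page also means the page decides whose messages it accepts. As a hedged sketch (not how any particular WebMCP library implements it), a postMessage listener might filter incoming events by origin and basic JSON-RPC shape before handing them to the server. `shouldAccept` and `allowedOrigins` are hypothetical names; `event.origin` and `event.data` are the standard `MessageEvent` properties.

```javascript
// Hypothetical allow-list of origins whose messages the page will process.
const allowedOrigins = new Set(['https://example.com']);

// Returns true only for JSON-RPC 2.0 requests that come from an allowed origin.
function shouldAccept(event) {
  if (!allowedOrigins.has(event.origin)) return false;
  const msg = event.data;
  return typeof msg === 'object' && msg !== null &&
    msg.jsonrpc === '2.0' && typeof msg.method === 'string';
}

// In a browser this would be wired up roughly as:
//   window.addEventListener('message', (event) => {
//     if (shouldAccept(event)) { /* pass event.data to the MCP server */ }
//   });

// Plain objects stand in for MessageEvent so the check can run outside a browser.
const listRequest = { jsonrpc: '2.0', id: 1, method: 'tools/list' };
console.log(shouldAccept({ origin: 'https://example.com', data: listRequest }));  // prints true
console.log(shouldAccept({ origin: 'https://evil.example', data: listRequest })); // prints false
```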

Why Not Just Metadata or ARIA?

- Static metadata (JSON-LD, meta tags): Great for describing content to search engines, but it can’t run live code, use the logged-in user’s session, or return fresh data.

- ARIA and accessibility attributes: They help screen readers, but an agent still has to simulate clicks or typing, so it breaks whenever the UI changes.

- MCP tools are durable APIs: You expose createInvoice() or filterTemplates() directly. Agents call them like RPC, independent of UI markup.

- Security & auth: The call happens in the user’s authenticated page context, so there is no need to share tokens with an external service.

Real Code: Exposing a Tool in the Browser

Install the libraries:

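```shell
npm install @mcp-b/transports @modelcontextprotocol/sdk zod
```

Drop the server code into your app and you’re ready to expose tools. Note that the SDK’s server.tool() helper takes its input schema as a Zod shape rather than raw JSON Schema, which is why zod is installed too.

```javascript
import { TabServerTransport } from '@mcp-b/transports';
import { McpServer } from '@modelcontextprotocol/sdk/server/mcp.js';
import { z } from 'zod';

const server = new McpServer({ name: 'demo-app', version: '1.0.0' });

// Read-only tool with no inputs: returns the page title and URL as JSON text.
server.tool(
  'getPageInfo',
  'Get the page title and URL',
  {},
  async () => ({
    content: [{ type: 'text', text: JSON.stringify({ title: document.title, url: location.href }) }]
  })
);

// Inputs are declared as a Zod shape; the SDK turns it into JSON Schema for agents.
server.tool(
  'createInvoice',
  'Create a new invoice',
  {
    customerEmail: z.string().email(),
    items: z.array(
      z.object({
        description: z.string(),
        amount: z.number()
      })
    )
  },
  async ({ customerEmail, items }) => {
    // Same-origin request, so the browser attaches the user's existing session.
    const resp = await fetch('/api/invoices', {
      method: 'POST',
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify({ customerEmail, items })
    });
    if (!resp.ok) {
      // Report the failure to the agent instead of pretending the invoice exists.
      return { content: [{ type: 'text', text: `Invoice creation failed: HTTP ${resp.status}` }], isError: true };
    }
    const data = await resp.json();
    return { content: [{ type: 'text', text: `Invoice created: ${data.id}` }] };
  }
);

// connect() returns a promise; await it so startup errors are not silently dropped.
await server.connect(new TabServerTransport());
```

Once this script runs, an agent in the same tab (via extension) can: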

- Discover the server.

- Call tools/list.

- Invoke createInvoice with JSON args.

- Get a structured result.
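From the agent’s side, the last two steps might look roughly like the sketch below. It assumes the JSON-RPC 2.0 envelope and the `tools/call` method from the MCP specification; `buildToolCall`, `readTextResult`, the invoice id, and the canned response are hypothetical, and the transport that would carry the messages between extension and page is left out.

```javascript
// Builds a JSON-RPC 2.0 request that calls a tool by name with JSON arguments.
function buildToolCall(id, name, args) {
  return { jsonrpc: '2.0', id, method: 'tools/call', params: { name, arguments: args } };
}

// Joins the text parts of a tool result; throws on protocol or tool-level errors.
function readTextResult(response) {
  if (response.error) throw new Error(response.error.message);
  if (response.result.isError) throw new Error('Tool reported an error');
  return response.result.content
    .filter((part) => part.type === 'text')
    .map((part) => part.text)
    .join('\n');
}

const request = buildToolCall(7, 'createInvoice', {
  customerEmail: 'a@example.com',
  items: [{ description: 'Consulting', amount: 1200 }]
});
console.log(JSON.stringify(request));

// A canned response stands in for what the page would send back.
const response = {
  jsonrpc: '2.0',
  id: 7,
  result: { content: [{ type: 'text', text: 'Invoice created: inv_123' }] }
};
console.log(readTextResult(response)); // prints "Invoice created: inv_123"
```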

Why This Matters

- Stable for developers — you expose clear functions instead of hoping DOM stays the same.

- Safer for users — agent runs inside their logged-in session; no need to share tokens.

- Richer AI experiences — instead of “clicking around,” agents can perform meaningful, complex tasks.

If we want a future where AI assistants really help us on the web, MCP + WebMCP is the missing API layer.

Takeaway

Think of MCP like a universal connector for AI → apps.

Think of WebMCP as the browser plug that lets your web app join that ecosystem.

When you’re building a modern web app and want it to be agent-friendly, you’ll soon be able to simply:

```javascript
server.tool('whateverYouWant', 'Human friendly description', schema, handler);
```

…and every compliant AI agent will know exactly how to talk to it.