There is a quiet revolution happening under the hood of AI. We are shifting from asking models questions toward letting agents act on our behalf. In this shift, the web, that familiar space built for humans, must evolve too. This is where the idea of an agentic web comes alive, and where WebMCP becomes a bridge between agentic intelligence and the real-world web.

What Is the Agentic Web?

By “agentic web,” I mean an internet populated by AI agents: autonomous, goal-oriented systems that do not just read pages but also interact with them, execute tasks, and collaborate across services. The web becomes a space not only for humans and their browsers but also for agents.

This is more than simply adding AI on top of websites. It is a shift from humans doing everything themselves to humans delegating some of that work to intelligent systems. Agents can traverse websites, invoke actions, orchestrate workflows, and maintain shared state with users.

The paper “Agentic Web: Weaving the Next Web with AI Agents” describes this shift along three dimensions: intelligence, interaction, and economics. The future web may become not just a network of documents but a network of tools, protocols, and delegated tasks.

Why Today’s Web Fails Agents

When you ask an LLM-based agent to interact with a site today, you hit several problems:

- UI is opaque: agents parse raw HTML, guess which buttons matter, and infer meaning from visual markup. The result is fragile.

- Context is lost: state does not carry over between pages, so agents re-derive context every time they refresh or navigate.

- Inefficiency: sending full-page snapshots or screenshots to a model is expensive and slow.

- Security gaps: if an agent can click and submit, how do we stop it from causing harm?

In short, the web was built for humans. For agents, it is noisy, ambiguous, and costly.

WebMCP: A Bridge for Developers

WebMCP addresses these problems by letting developers explicitly expose tools: JavaScript functions with clear schemas that agents can call directly. It makes parts of the UI machine-readable without requiring a rebuild of the backend.

How it works:

- The page registers named tools with descriptions and input/output schemas

- The agent discovers available tools via the Model Context Protocol (MCP)

- The agent calls a tool with structured arguments

- The page runs it in context and returns structured results
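The following TypeScript sketch walks through the four steps as one loop: register, discover, call, return. It deliberately does not use the real WebMCP browser API. `ToolRegistry`, `registerTool`, `listTools`, `callTool`, and the `addToCart` tool are hypothetical names chosen for illustration. The schema check covers only a small subset of JSON Schema (flat objects with string, number, or boolean fields), and everything is kept synchronous for brevity.

```typescript
// Hypothetical sketch: NOT the WebMCP API. Models register -> discover -> call -> return.

// A tiny subset of JSON Schema: flat objects with primitive properties.
type PrimitiveType = "string" | "number" | "boolean";

interface ObjectSchema {
  type: "object";
  properties: Record<string, { type: PrimitiveType; description?: string }>;
  required?: string[];
}

interface ToolDefinition {
  name: string;
  description: string;
  inputSchema: ObjectSchema;
  // Runs in the page's context and returns a structured, JSON-serializable result.
  execute: (args: Record<string, unknown>) => Record<string, unknown>;
}

// Rejects missing required fields, unexpected fields, and wrongly typed values.
function validateArgs(schema: ObjectSchema, args: Record<string, unknown>): void {
  for (const key of schema.required ?? []) {
    if (!(key in args)) throw new Error(`Missing required argument "${key}"`);
  }
  for (const key of Object.keys(args)) {
    const prop = Object.prototype.hasOwnProperty.call(schema.properties, key)
      ? schema.properties[key]
      : undefined;
    if (!prop) throw new Error(`Unexpected argument "${key}"`);
    if (typeof args[key] !== prop.type) throw new Error(`Argument "${key}" must be a ${prop.type}`);
  }
}

class ToolRegistry {
  private tools = new Map<string, ToolDefinition>();

  // Step 1: the page registers a named tool with a description and schema.
  registerTool(tool: ToolDefinition): void {
    if (this.tools.has(tool.name)) throw new Error(`Tool "${tool.name}" is already registered`);
    this.tools.set(tool.name, tool);
  }

  // Step 2: the agent discovers tools. It sees metadata only, never the implementation.
  listTools(): Array<Omit<ToolDefinition, "execute">> {
    return Array.from(this.tools.values()).map(({ name, description, inputSchema }) => ({
      name,
      description,
      inputSchema,
    }));
  }

  // Steps 3 and 4: structured arguments in, structured result out.
  callTool(toolName: string, args: Record<string, unknown>): Record<string, unknown> {
    const tool = this.tools.get(toolName);
    if (!tool) throw new Error(`Unknown tool "${toolName}"`);
    validateArgs(tool.inputSchema, args);
    return tool.execute(args);
  }
}

// Usage: a hypothetical shopping page exposing one tool.
const registry = new ToolRegistry();
const cart: Array<{ sku: string; quantity: number }> = [];

registry.registerTool({
  name: "addToCart",
  description: "Add a product to the shopping cart by SKU.",
  inputSchema: {
    type: "object",
    properties: {
      sku: { type: "string", description: "Product SKU" },
      quantity: { type: "number", description: "Units to add" },
    },
    required: ["sku", "quantity"],
  },
  execute: (args) => {
    cart.push({ sku: args.sku as string, quantity: args.quantity as number });
    return { ok: true, cartSize: cart.length };
  },
});

const discovered = registry.listTools().map((t) => t.name); // ["addToCart"]
const firstCall = registry.callTool("addToCart", { sku: "A-42", quantity: 2 }); // { ok: true, cartSize: 1 }
```

`execute` is kept out of `listTools` on purpose. The agent only sees names, descriptions, and schemas, and the page keeps control of what actually runs.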

This approach makes agent work cheaper and more reliable. Early benchmarks report that WebMCP can cut agent processing by about 67 percent while keeping task accuracy high. Developers also stay in control of which actions exist and how they behave.

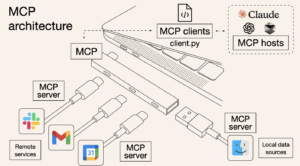

MCP: The Standard Underneath

The Model Context Protocol (MCP) defines how agents talk to external tools and data sources. An MCP server exposes capabilities such as tools, and the agent side acts as a client that discovers and invokes them.

WebMCP extends this idea into the browser. A page can act like an MCP server and offer its actions directly to agents. For developers, this means your web app can be an active participant in the agentic ecosystem without rewriting APIs.
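MCP messages are JSON-RPC 2.0. The sketch below is a simplified picture of how a page acting as a server might answer the two tool methods, `tools/list` and `tools/call`. The message shapes follow my reading of the MCP specification. The sketch leaves out the initialization handshake, capability negotiation, and the transport entirely. `handleMessage` and the `echo` tool are hypothetical.

```typescript
// Simplified, hypothetical page-side handler for two MCP methods.
// Omits the initialize handshake, capability negotiation, and transport.

interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number | string;
  method: string;
  params?: { name?: string; arguments?: Record<string, unknown> };
}

interface JsonRpcResponse {
  jsonrpc: "2.0";
  id: number | string;
  result?: unknown;
  error?: { code: number; message: string };
}

interface PageTool {
  name: string;
  description: string;
  inputSchema: Record<string, unknown>;
  run: (args: Record<string, unknown>) => string;
}

// Tools this page chooses to expose (illustrative).
const pageTools: PageTool[] = [
  {
    name: "echo",
    description: "Return the provided text unchanged.",
    inputSchema: { type: "object", properties: { text: { type: "string" } }, required: ["text"] },
    run: (args) => String(args.text),
  },
];

function handleMessage(req: JsonRpcRequest): JsonRpcResponse {
  if (req.method === "tools/list") {
    // Advertise metadata only: name, description, and input schema.
    const tools = pageTools.map(({ name, description, inputSchema }) => ({ name, description, inputSchema }));
    return { jsonrpc: "2.0", id: req.id, result: { tools } };
  }

  if (req.method === "tools/call") {
    const toolName = req.params?.name ?? "";
    const tool = pageTools.find((t) => t.name === toolName);
    if (!tool) {
      // -32602 is JSON-RPC's "Invalid params" code.
      return { jsonrpc: "2.0", id: req.id, error: { code: -32602, message: `Unknown tool: ${toolName}` } };
    }
    try {
      const text = tool.run(req.params?.arguments ?? {});
      return { jsonrpc: "2.0", id: req.id, result: { content: [{ type: "text", text }] } };
    } catch (err) {
      // Tool failures are reported inside the result so the agent can see and react to them.
      return {
        jsonrpc: "2.0",
        id: req.id,
        result: { content: [{ type: "text", text: String(err) }], isError: true },
      };
    }
  }

  // -32601 is JSON-RPC's "Method not found" code.
  return { jsonrpc: "2.0", id: req.id, error: { code: -32601, message: `Method not found: ${req.method}` } };
}

// Usage: an agent first discovers the tools, then calls one.
const listed = handleMessage({ jsonrpc: "2.0", id: 1, method: "tools/list" });
const called = handleMessage({
  jsonrpc: "2.0",
  id: 2,
  method: "tools/call",
  params: { name: "echo", arguments: { text: "hi" } },
});
// called.result: { content: [{ type: "text", text: "hi" }] }
```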

Challenges and Risks

The move toward an agentic web brings serious challenges.

1. Permissions and trust

You must choose what to expose and under what conditions. A bad setup could let untrusted agents do harm.

2. Prompt injection and naming attacks

Agents may be tricked into calling dangerous functions, for example by instructions hidden in page content, or into using fake tools whose names imitate legitimate ones.

3. State conflicts

When multiple agents and users act on the same data, they can leave it in an inconsistent state unless actions are carefully designed.

4. Data leaks

Even simple read tools can reveal sensitive information.

5. Maintenance burden

Every tool you expose becomes part of your API surface. You must version and test it.
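Some of these risks can be narrowed at the point where a tool is invoked, though not eliminated. The sketch below wraps a read tool in two hypothetical guards. The first is a deny-by-default allowlist of callers, which addresses challenge 1. The second is an output filter that returns only explicitly exposed fields, which addresses challenge 4. The caller IDs, the tool name, and the customer record are made up for illustration. A real deployment would also need a trustworthy way to identify the caller, and this sketch does not provide one.

```typescript
// Hypothetical guard layer; caller IDs, tool names, and fields are illustrative.

type ToolResult = Record<string, unknown>;

interface GuardedTool {
  allowedCallers: string[]; // deny by default: only these callers may invoke the tool
  exposedFields: string[]; // only these fields ever leave the page
  run: (args: Record<string, unknown>) => ToolResult;
}

// Simulated data: the full record holds fields an agent should never see.
const customerRecord = {
  id: "c-1001",
  displayName: "Ada",
  orderCount: 3,
  email: "ada@example.com",
  paymentToken: "tok_secret",
};

const guardedTools = new Map<string, GuardedTool>([
  [
    "getCustomerSummary",
    {
      allowedCallers: ["trusted-assistant"],
      exposedFields: ["displayName", "orderCount"],
      run: () => ({ ...customerRecord }),
    },
  ],
]);

function invokeGuarded(caller: string, toolName: string, args: Record<string, unknown>): ToolResult {
  // Unknown tools are rejected outright.
  const tool = guardedTools.get(toolName);
  if (!tool) throw new Error(`Refusing unregistered tool "${toolName}"`);

  // Permissions: refuse unless this caller is explicitly allowed.
  if (tool.allowedCallers.indexOf(caller) === -1) {
    throw new Error(`Caller "${caller}" may not use "${toolName}"`);
  }

  // Data leaks: copy out only the allowlisted fields; everything else is dropped.
  const raw = tool.run(args);
  const filtered: ToolResult = {};
  for (const field of tool.exposedFields) {
    if (field in raw) filtered[field] = raw[field];
  }
  return filtered;
}

// Usage: an allowed caller gets a redacted summary.
const customerSummary = invokeGuarded("trusted-assistant", "getCustomerSummary", {});
// customerSummary: { displayName: "Ada", orderCount: 3 }; email and paymentToken are never returned
```

The design choice here is allowlisting rather than blocklisting. A field or caller that nobody thought about stays hidden or denied instead of leaking by default.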

Designing for Agents

Some guiding principles I find useful:

- Expose as little as possible and start small

- Use clear schemas for all inputs and outputs

- Add validation and safety checks around every action

- Keep a human approval path where it matters

- Make actions idempotent or easy to roll back

- Plan for versioning and future compatibility
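Two of these principles, human approval and idempotency, fit naturally into a single wrapper. In the sketch below, a side-effecting tool runs only after a confirmation callback returns true. Every call also carries an idempotency key, so an agent that retries cannot place the same order twice. `ApprovedOrderTool`, `placeOrder`, and the `askHuman` callback are hypothetical. The synchronous callback stands in for whatever confirmation UI a real page would show.

```typescript
// Hypothetical sketch: a side-effecting tool guarded by human approval and idempotency keys.

interface OrderResult {
  orderId: string;
  replayed: boolean; // true when a stored result was returned instead of placing a new order
}

class ApprovedOrderTool {
  private completed = new Map<string, string>(); // idempotency key -> order ID
  private nextId = 1;
  private askHuman: (summary: string) => boolean;

  // askHuman stands in for a real confirmation UI; it returns true if the person approves.
  constructor(askHuman: (summary: string) => boolean) {
    this.askHuman = askHuman;
  }

  placeOrder(idempotencyKey: string, sku: string, quantity: number): OrderResult {
    // Idempotency: retrying with the same key returns the original order, not a duplicate.
    const previous = this.completed.get(idempotencyKey);
    if (previous !== undefined) return { orderId: previous, replayed: true };

    // Validate before anything reaches the human or the backend.
    if (!Number.isInteger(quantity) || quantity < 1) {
      throw new Error("quantity must be a positive integer");
    }

    // Human in the loop: the agent proposes, a person confirms.
    if (!this.askHuman(`Order ${quantity} x ${sku}?`)) {
      throw new Error("Order declined by user");
    }

    const orderId = `order-${this.nextId++}`;
    this.completed.set(idempotencyKey, orderId);
    return { orderId, replayed: false };
  }
}

// Usage: the approval callback records each prompt and approves it.
const approvalPrompts: string[] = [];
const orderTool = new ApprovedOrderTool((summary) => {
  approvalPrompts.push(summary);
  return true;
});

const firstAttempt = orderTool.placeOrder("key-abc", "A-42", 2);
const agentRetry = orderTool.placeOrder("key-abc", "A-42", 2);
// firstAttempt: { orderId: "order-1", replayed: false }
// agentRetry:   { orderId: "order-1", replayed: true }, and the person was asked only once
```

The idempotency check runs before validation and approval, so a replayed key never prompts the person twice. A production version would probably also verify that a replayed key arrives with the same arguments as the original call.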

What Excites Me

- WordPress and WebMCP: imagine agents creating, editing, and managing content natively

- Cross-site agent workflows: agents hopping between your calendar, CRM, and analytics tools

- Human-in-the-loop UIs: agents propose, humans confirm

- Security-first tooling: better sandboxes and safe defaults for developers

- New economic models: agents negotiating purchases or automating transactions

I am cautious about hype and complexity, but the potential for cleaner, safer agent interactions on the web is huge.

Closing Thoughts

The agentic web will not arrive overnight. It is less about replacing humans and more about building systems that let agents assist while keeping humans in control.

If you are a web developer, now is the time to ask: Which actions on my site could an agent help with? How should I expose them? How do I keep it safe?

This future belongs to those who design thoughtfully for both humans and agents.